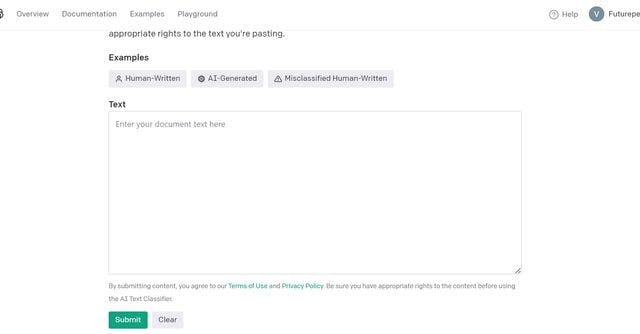

OpenAI has released the AI Text Classifier, a tool that predicts how likely it is that a piece of text was generated by AI. The classifier is a fine-tuned GPT model trained on English content written by adults. It requires at least 1,000 characters of input and is intended to spark discussion about AI literacy, but it is not always accurate. Users submit text and receive one of five verdicts: the text is “very unlikely,” “unlikely,” “unclear if it is,” “possibly,” or “likely” AI-generated.
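The five verdicts amount to bucketing a single probability score. The minimal sketch below illustrates that idea under stated assumptions: the function `label_for`, its `p_ai` input, and the cut-off values in `LABEL_THRESHOLDS` are all hypothetical placeholders, since this article does not say how OpenAI maps scores to labels. Only the 1,000-character minimum comes from the text above.

```python
# Hypothetical sketch: map a probability score to one of the five verdicts.
MIN_CHARS = 1_000  # minimum input length stated in the article

# ASSUMPTION: illustrative upper bounds for each verdict, not OpenAI's values.
LABEL_THRESHOLDS = [
    (0.10, "very unlikely"),
    (0.45, "unlikely"),
    (0.90, "unclear if it is"),
    (0.98, "possibly"),
]


def label_for(text: str, p_ai: float) -> str:
    """Return a verdict for `text`, given an assumed probability `p_ai` that it is AI-generated."""
    if len(text) < MIN_CHARS:
        raise ValueError(f"text must be at least {MIN_CHARS} characters")
    if not 0.0 <= p_ai <= 1.0:
        raise ValueError("p_ai must be between 0 and 1")
    # Return the first bucket whose upper bound exceeds the score.
    for upper, label in LABEL_THRESHOLDS:
        if p_ai < upper:
            return label
    # Scores at or above the last bound fall into the top bucket.
    return "likely"


print(label_for("x" * 1_000, 0.5))  # prints "unclear if it is"
```

Bucketing a continuous score into coarse labels is a deliberate way to avoid implying more precision than the model has; inputs shorter than the minimum are rejected outright rather than scored.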

The classifier is publicly available, and OpenAI is releasing it to gather feedback on whether imperfect tools like this one are useful. It is not fully reliable, although its reliability typically improves as the input text gets longer. On a “challenge set” of English texts, the classifier correctly labels 26% of AI-written text as “likely AI-written” (true positives), while incorrectly labeling human-written text as AI-written 9% of the time (false positives). It performs significantly worse on text in other languages and is unreliable on code. AI-written text can also be edited to evade the classifier.
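To see what the 26% and 9% figures mean in practice, the short worked example below applies Bayes' rule to estimate what share of “likely AI-written” flags are actually AI-written. The base rates (50% and 10% of submissions being AI-written) are assumptions chosen for illustration, and real-world rates could differ from the challenge-set figures.

```python
def precision(tpr: float, fpr: float, base_rate: float) -> float:
    """Fraction of flagged texts that are actually AI-written.

    tpr: share of AI-written texts flagged (26% on the challenge set)
    fpr: share of human-written texts flagged (9% on the challenge set)
    base_rate: ASSUMED share of AI-written texts among submissions
    """
    flagged_ai = tpr * base_rate
    flagged_human = fpr * (1.0 - base_rate)
    return flagged_ai / (flagged_ai + flagged_human)


TPR, FPR = 0.26, 0.09  # challenge-set figures quoted in the article

for base_rate in (0.5, 0.1):
    p = precision(TPR, FPR, base_rate)
    print(f"base rate {base_rate:.0%}: {p:.0%} of flags are AI-written")
# base rate 50%: 74% of flags are AI-written
# base rate 10%: 24% of flags are AI-written
```

Under these assumptions, when AI-written text is rare, most flags land on human-written text, and in every case 74% of AI-written text is never flagged at all.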

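Overall, the OpenAI AI Text Classifier can offer a useful signal for detecting AI-generated text, but with a 26% detection rate and a 9% false-positive rate on the challenge set, its verdicts are far from conclusive and should not serve as the sole basis for a decision.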