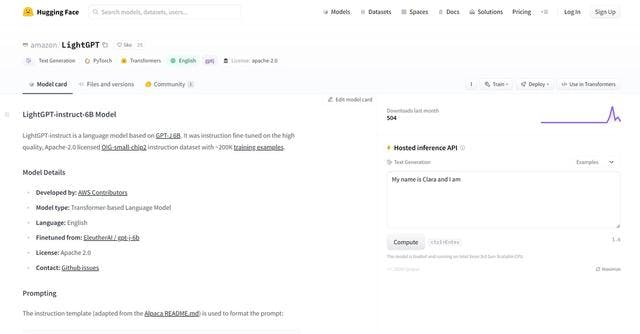

LightGPT is a transformer-based language model built on GPT-J 6B and fine-tuned on a high-quality, Apache-2.0-licensed instruction dataset of around 200K training examples. It was developed by AWS contributors and is available on Hugging Face. The model is designed primarily for natural language generation and can respond to a variety of conversational prompts.

Here are some key details about LightGPT:

- Developed by: AWS Contributors

- Model type: Transformer-based Language Model

- Language: English

- Fine-tuned from: EleutherAI/gpt-j-6b

- License: Apache 2.0

LightGPT has several known limitations. It may fail to follow instructions when the input is long, and it often answers math and reasoning questions incorrectly. Its outputs are also frequently factually wrong or misleading, so users should verify anything the model produces before relying on it.

Several resources are available for those interested in using LightGPT, including the LightGPT GitHub repository, the LightGPT page on TopApps.Ai, and the LightGPT page on TheresAnAIForThat.com. AWS also provides high-level APIs to train and evaluate a Lightning-based GPT model on data tokenized with `AutoTokenizer`.
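Since the model is distributed through Hugging Face, a minimal sketch of prompting it with the `transformers` library might look like the following. The repository id `amazon/LightGPT` and the Alpaca-style prompt template are assumptions that are not stated in this text, and the helper names `build_prompt` and `generate` are hypothetical; check the model page before relying on any of them. The sampling settings are illustrative only.

```python
"""Minimal sketch: prompting LightGPT via Hugging Face transformers."""

# Assumption: the Hub repository id; confirm it on the model page.
MODEL_ID = "amazon/LightGPT"

# Assumption: an Alpaca-style instruction template; the real one may differ.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_prompt(instruction: str) -> str:
    """Wrap a plain instruction in the (assumed) prompt template."""
    return PROMPT_TEMPLATE.format(instruction=instruction.strip())


def generate(instruction: str, max_new_tokens: int = 128) -> str:
    """Load the model and return only the newly generated text."""
    # Imported lazily so build_prompt works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    inputs = tokenizer(build_prompt(instruction), return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,
        temperature=0.7,  # illustrative value, not a recommended setting
        pad_token_id=tokenizer.eos_token_id,
    )
    # Drop the prompt tokens so only the continuation is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("Summarize what instruction fine-tuning is.")` downloads the full 6B-parameter checkpoint, so it needs substantial memory and is best run on a GPU. Given the limitations noted above, keeping instructions short and checking the output for factual errors is advisable.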